Once data has been gathered, the researcher must analyze and interpret it. However, people are prone to certain tendencies and biases that must be guarded against when drawing inferences from findings. When solving problems or reasoning, people often rely on heuristics, or mental shortcuts. These shortcuts, which are often influenced by cognitive biases, save time and energy but can cause errors in reasoning.

Psychological Heuristics

There are several types of heuristics used to save time when drawing conclusions about large amounts of information, including availability, representativeness, and similarity heuristics.

Availability Heuristic

The availability heuristic involves estimating how common an event is based on how easily we can recall instances of it. Things that are easily remembered are judged to be more common than things that are hard to recall. As a result, the availability heuristic leads people to overestimate the frequency of events that come readily to mind.

An example of this is when we incorrectly believe that "spectacular" occurrences (like deaths caused by severe weather) happen more often than regular ones (like deaths caused by disease). Since the media covers these "spectacular" occurrences more often, and with more emphasis, they become more available to our memory. In the same way, vivid or exceptional outcomes will be perceived as more likely than those that are harder to picture, or are difficult to understand.

Consider the following research example. A researcher is conducting a clinical study that requires her to screen participants for mental illnesses. This researcher has recently read about and heard news stories on antisocial personality disorder. As a result, she ends up incorrectly flagging several participants in the sample as having antisocial personality disorder, when in reality, this mental illness is quite infrequent in the general population.
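
The role of the low base rate in this example can be made concrete with a short calculation. The sketch below is illustrative only: the function name and all three numbers (a 2% prevalence, and a screening judgment that is right 90% of the time for both affected and unaffected participants) are hypothetical assumptions, not figures from any study. It applies Bayes' rule to estimate what share of flagged participants would actually have the disorder.

```python
def positive_predictive_value(base_rate, sensitivity, specificity):
    """Share of flagged participants who truly have the condition (Bayes' rule)."""
    true_positives = base_rate * sensitivity
    false_positives = (1 - base_rate) * (1 - specificity)
    return true_positives / (true_positives + false_positives)

# Hypothetical values chosen only for illustration
ppv = positive_predictive_value(base_rate=0.02, sensitivity=0.90, specificity=0.90)
print(f"Share of flags that are correct: {ppv:.1%}")
```

Under these assumed numbers, fewer than one in six flagged participants would truly have the disorder. A researcher whose sense of how common the disorder is has been inflated by recent news coverage would expect far more.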

Representativeness Heuristic

We use the representativeness heuristic when we judge the probability of an event under uncertainty by how closely it resembles our mental prototype of a category. People who rely on representativeness are likely to judge incorrectly, because the fact that something is more representative does not make it more likely. In other words, people tend to overestimate how well an event's representativeness predicts its likelihood.

For example, suppose you are conducting a study of how many men versus women choose careers as scientists. Based on the schemas you hold about men most often being in science (that is, what is most representative in your mind when you think of a typical scientist), you predict that most scientists will be male. However, in reality, there is a roughly 50% chance that a scientist will be a woman rather than a man.

Similarity Heuristic

The similarity heuristic describes how people make judgments based on the similarity between the current situation and previous ones. We use it when we base decisions on favorable versus unfavorable past experiences and on how closely the present resembles them.

We rely on the similarity heuristic all the time when making decisions. For example, if someone enjoys a book by John Irving, they may generalize that to assume that they would also enjoy other books by John Irving, and they will be more likely to read another. Much of the time this is helpful and saves us time in making decisions.

However, this heuristic can introduce bias in research, where it is important to remain an objective observer. For example, a researcher may unconsciously draw the same conclusions as earlier studies that used similar methods, precisely because the new study resembles them. Although this assumption sometimes reflects the data accurately (results do frequently replicate across studies), it can also lead the researcher to exclude other, valid interpretations.

Cognitive Biases

Cognitive biases are another factor that can lead researchers to make incorrect inferences when analyzing data. A cognitive bias is a systematic tendency of the mind to depart from sound reasoning, which can lead to incorrect conclusions.

The illusory correlation bias is our predisposition to perceive a relationship between variables (typically people, events, or behaviors) even when no such relationship exists. A common example is when people form false associations between membership in a statistical minority group and rare (typically negative) behaviors. This is one way stereotypes form and endure. Hamilton & Rose (1980) found that stereotypes can lead people to expect certain groups and traits to fit together, and then to overestimate the frequency with which these correlations actually occur.
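
One way to guard against an illusory correlation is to compute the association directly instead of relying on impressions. The sketch below is a minimal illustration with hypothetical counts (they are not data from Hamilton & Rose or any other study), and the function name is an assumption. It computes the phi coefficient for a 2x2 table in which the minority group is smaller and negative behaviors are rarer, yet both groups show negative behaviors at the same rate.

```python
import math

def phi_coefficient(a, b, c, d):
    """Phi coefficient for a 2x2 table [[a, b], [c, d]]."""
    numerator = a * d - b * c
    denominator = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return numerator / denominator

# Hypothetical counts: each tuple is (positive behaviors, negative behaviors)
majority = (18, 8)
minority = (9, 4)

phi = phi_coefficient(*majority, *minority)
negative_rate_majority = majority[1] / sum(majority)
negative_rate_minority = minority[1] / sum(minority)
print(phi, negative_rate_majority, negative_rate_minority)
```

With these counts the rates are identical and the phi coefficient is zero, so group membership and behavior are unrelated. The pairing of the two rarest categories may still stand out in memory, which is how an association that is not in the data can come to be perceived.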

Hindsight bias is a false memory of having predicted events, or an exaggeration of actual predictions, after becoming aware of the outcome. This is the moment after something occurs when we look back and say, "I saw that coming," or look back and put together all the signs and pieces that led to the eventual outcome. Hindsight bias occurs in psychological research when researchers form "post hoc hypotheses." When a researcher obtains a result that runs counter to the original prediction, the researcher may use post hoc hypotheses to revise that prediction to fit the obtained result.

Confirmation bias is the tendency to search for, or interpret, information in a way that confirms one's existing beliefs. This occurs when we look only for information that affirms what we already believe to be true. Confirmation bias is especially dangerous in psychological research. A researcher who has a particular hypothesis in mind may look for patterns in the data that support that hypothesis while ignoring other important patterns that oppose it.

Apophenia is the tendency to perceive meaningful patterns or connections in random or meaningless data. Researchers make this mistake when they obtain mostly null results (results that do not support their hypothesis) and compensate by exaggerating or magnifying any pattern they do find. In reality, null findings are common, which is one reason researchers typically conduct multiple studies to examine their research questions.
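
A short calculation shows why an isolated pattern among many null results deserves caution. The sketch below is illustrative and rests on stated assumptions: the function name is hypothetical, the tests are assumed to be independent, no real effect is assumed to exist, and 0.05 is used as the conventional significance threshold. It computes the probability that at least one test comes out "significant" purely by chance.

```python
def chance_of_spurious_pattern(num_tests, alpha=0.05):
    """Probability that at least one of num_tests independent tests
    on pure noise reaches significance at level alpha."""
    return 1 - (1 - alpha) ** num_tests

# Chance of at least one false positive for a few hypothetical numbers of tests
for k in (1, 5, 20):
    print(k, round(chance_of_spurious_pattern(k), 3))
```

Under these assumptions, running 20 tests on data that contain no real effect gives about a 64% chance of at least one apparent finding, so a single pattern that stands out from many null results is weak evidence on its own.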

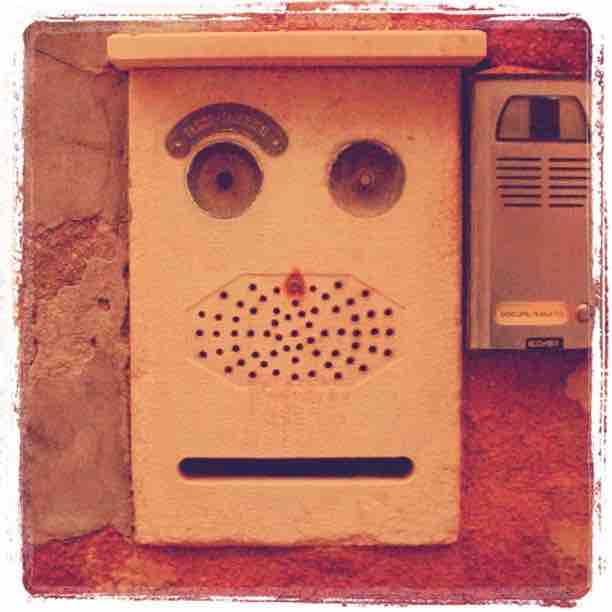

Pareidolia occurs when a vague and random stimulus is perceived as significant when it is not. Examples include seeing images of animals or other things in the clouds, seeing the Man in the Moon or the face on Mars, hearing hidden messages in songs played in reverse, or giving inanimate objects qualities that make them seem human.

Pareidolia

Our minds trick us into believing something exists when it doesn't. They trick us into seeing a pattern or likeness in something that is relatively meaningless. People tend to see a face raising an eyebrow when they look at this intercom system.