Our concept of intelligence has evolved over time, and intelligence tests have evolved along with it. Researchers continually seek ways to measure intelligence more accurately.

History of Intelligence Testing

The abbreviation "IQ" comes from the term intelligence quotient (from the German Intelligenz-Quotient), coined by the German psychologist William Stern in 1912. Intelligence testing itself is slightly older: in 1905, Alfred Binet and Theodore Simon published the first modern intelligence test, the Binet-Simon intelligence scale. Because it was easy to administer, the Binet-Simon scale was adopted for use in many other countries.

These practices eventually made their way to the United States, where psychologist Lewis Terman of Stanford University adapted them for American use. He created and published the first IQ test in the United States, the Stanford-Binet IQ test. The test expressed an individual's intelligence level as a quotient (hence the term "intelligence quotient") of their estimated mental age divided by their chronological age. A child's "mental age" was the age of the group whose mean score matched the child's score. So if a five-year-old child performed at the same level as an average eight-year-old, he or she would have a mental age of eight. The original formula for the quotient was (Mental Age / Chronological Age) × 100. Thus, a five-year-old child who performed at the same level as his or her five-year-old peers would score 100. The score of 100 became the average score, and it is still used as the average today.
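The quotient formula described above can be written as a small calculation. The following is only an illustrative sketch: the function name `ratio_iq` and its parameter names are hypothetical, chosen for this example, and are not part of any published test.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Ratio IQ as described in the text: (mental age / chronological age) x 100."""
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    # Multiply first so that whole-number ages give exact results.
    return 100 * mental_age / chronological_age


# The five-year-old from the text who performs like an average eight-year-old.
print(ratio_iq(mental_age=8, chronological_age=5))  # 160.0

# A five-year-old who performs like other five-year-olds.
print(ratio_iq(mental_age=5, chronological_age=5))  # 100.0
```

A child whose mental age is below his or her chronological age would score under 100 by the same arithmetic.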

Wechsler Adult Intelligence Scale

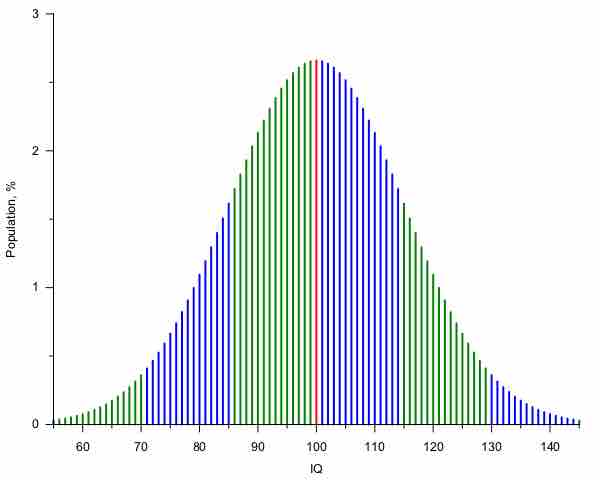

In 1939, David Wechsler published the first intelligence test explicitly designed for an adult population, the Wechsler-Bellevue Intelligence Scale, which was revised in 1955 as the Wechsler Adult Intelligence Scale, or WAIS. Wechsler also extended his scale for younger people, creating the Wechsler Intelligence Scale for Children, or WISC. The Wechsler scales contained separate subscores for verbal IQ and performance IQ, and were thus less dependent on overall verbal ability than early versions of the Stanford-Binet scale. The Wechsler scales were the first intelligence scales to base scores on a standardized bell curve (a type of graph that is symmetric about the average, with most scores near the average and very few scores far from it).

Modern IQ tests report a score defined by a person's position on a bell curve: most people score near the average, and progressively fewer people score at points further above or below it. Approximately 95% of the population scores between 70 and 130 points. However, the relationship between IQ score and mental ability is not linear: a person with a score of 50 does not have half the mental ability of a person with a score of 100.
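The 95% figure can be checked with a short calculation. This sketch assumes that scores follow a normal distribution with a mean of 100 and a standard deviation of 15. The mean comes from the text; the standard deviation of 15 is an assumption made for illustration (it is not stated above), and the helper name `share_between` is hypothetical.

```python
from statistics import NormalDist

# Assumption: mean 100 (from the text), standard deviation 15 (not stated in the text).
iq_scores = NormalDist(mu=100, sigma=15)


def share_between(low: float, high: float) -> float:
    """Fraction of the population expected to score between low and high."""
    return iq_scores.cdf(high) - iq_scores.cdf(low)


# Within two standard deviations of the average: roughly 95%.
print(f"70 to 130: {share_between(70, 130):.1%}")

# Within one standard deviation of the average: roughly 68%.
print(f"85 to 115: {share_between(85, 115):.1%}")
```

Under these assumptions, 70 and 130 sit two standard deviations below and above the average, which is why about 95% of scores fall between them.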

IQ Curve

The bell-shaped curve for IQ scores has an average value of 100.

General Intelligence Factor

Charles Spearman pioneered the theory that a single general intelligence factor, which he called g, underlies disparate cognitive tasks. In the normal population, g and IQ have a correlation of roughly 0.90. This strong correlation means that if you know someone's IQ score, you can predict their g with a high level of accuracy, and vice versa. As a result, the two terms are often used interchangeably.

Culture-Fair Tests

In order to develop an IQ test that separated environmental from genetic factors, Raymond B. Cattell created the Culture-Fair Intelligence Test. Cattell argued that general intelligence g exists and that it consists of two parts: fluid intelligence (the capacity to think logically and solve problems in novel situations) and crystallized intelligence (the ability to use skills, knowledge, and experience). He further argued that g should be free of cultural bias such as differences in language and education type. This idea, however, is still controversial.

Another supposedly culture-fair test is Raven's Progressive Matrices, developed by John C. Raven in 1936. This test is a nonverbal group test typically used in educational settings, designed to measure the reasoning ability associated with g.

The Flynn Effect

Over much of the twentieth century, average scores on IQ tests rose in many parts of the world. This increase is now called the "Flynn effect," named after Jim Flynn, who did much of the work to document and promote awareness of this phenomenon and its implications. Because of the Flynn effect, IQ tests are periodically recalibrated to keep the average score at 100; as a result, someone who scored 100 against the norms of 1950 would receive a lower score for the same performance on today's test.
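The effect of recalibration can be sketched with simple arithmetic. The example below assumes that average performance drifts upward at a constant rate; the function name `score_on_later_norms` and the default of 3 points per decade are illustrative assumptions, not figures taken from the text, and real gains have varied by country, period, and test.

```python
def score_on_later_norms(
    score: float, years_elapsed: float, points_per_decade: float = 3.0
) -> float:
    """Restate a score against norms set years_elapsed years later.

    Assumes a constant upward drift in average performance. The default
    rate is an illustrative assumption, not a value from the text.
    """
    return score - points_per_decade * years_elapsed / 10


# A performance scored as 100 on older norms, restated on norms set 50 years later.
print(score_on_later_norms(100, years_elapsed=50))  # 85.0
```

Under these assumptions, the same performance earns a lower number simply because the reference group has improved, not because the individual has changed.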