Discrete Random Variable

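A discrete random variable $X$ takes values from a countable set, written here as $x_1, x_2, \dots, x_k$, with corresponding probabilities $p_1, p_2, \dots, p_k$. These probabilities must satisfy two requirements:

- Every probability $p_i$ is a number between 0 and 1.

- The sum of the probabilities is 1: $p_1+p_2+\dots + p_k = 1$ .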
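The two requirements on the probabilities can be checked mechanically. The following is a minimal sketch, assuming the distribution is supplied as a plain list of floats; the function name `is_valid_distribution` and the tolerance `tol` are illustrative choices rather than anything defined in the text.

```python
import math


def is_valid_distribution(probs, tol=1e-9):
    """Return True if probs satisfies both requirements (hypothetical helper)."""
    # Requirement 1: every probability lies between 0 and 1.
    if any(p < 0 or p > 1 for p in probs):
        return False
    # Requirement 2: the probabilities sum to 1, up to floating-point rounding.
    return math.isclose(sum(probs), 1.0, abs_tol=tol)


fair_die = [1 / 6] * 6
print(is_valid_distribution(fair_die))     # fair six-sided die
print(is_valid_distribution([0.5, 0.6]))   # entries in range, but sum is 1.1
print(is_valid_distribution([1.5, -0.5]))  # sum is 1, but entries out of range
```

The comparison uses a tolerance because sums of floats such as `1 / 6` are rarely exactly 1.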

Expected Value Definition

In probability theory, the expected value (or expectation, mathematical expectation, EV, mean, or first moment) of a random variable is the weighted average of all possible values that this random variable can take on. The weights used in computing this average are probabilities in the case of a discrete random variable.

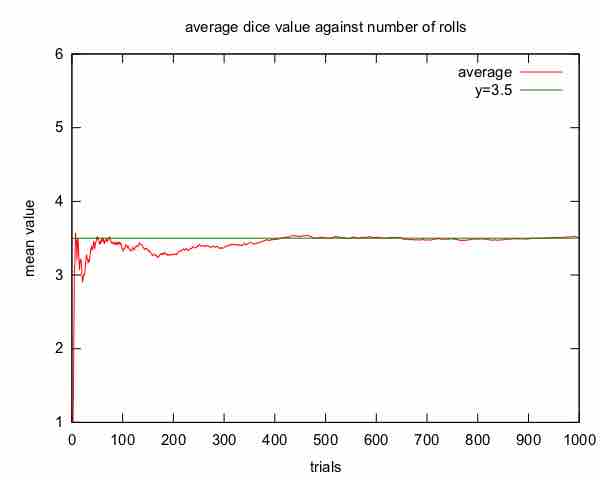

The expected value may be intuitively understood by the law of large numbers: the expected value, when it exists, is almost surely the limit of the sample mean as sample size grows to infinity. More informally, it can be interpreted as the long-run average of the results of many independent repetitions of an experiment (e.g., a die roll). The value may not be expected in the ordinary sense: the "expected value" itself may be unlikely or even impossible (such as having 2.5 children), as is also the case with the sample mean.

How To Calculate Expected Value

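Suppose random variable $X$ can take value $x_1$ with probability $p_1$, value $x_2$ with probability $p_2$, and so on, up to value $x_k$ with probability $p_k$. Then the expected value of $X$ is the probability-weighted sum

$E[X] = x_1 p_1 + x_2 p_2 + \dots + x_k p_k$ .

If all outcomes $x_i$ are equally likely (that is, $p_1 = p_2 = \dots = p_k = 1/k$), the weighted average reduces to the simple average $(x_1 + x_2 + \dots + x_k)/k$.

For example, let $X$ represent the outcome of a roll of a fair six-sided die. The possible values are 1, 2, 3, 4, 5, and 6, each with probability $1/6$, so

$E[X] = 1\cdot\frac{1}{6} + 2\cdot\frac{1}{6} + 3\cdot\frac{1}{6} + 4\cdot\frac{1}{6} + 5\cdot\frac{1}{6} + 6\cdot\frac{1}{6} = \frac{21}{6} = 3.5$ .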
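The weighted-average calculation for a discrete random variable can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the function name `expected_value` is hypothetical, and exact `Fraction` arithmetic is assumed only so that the result is free of floating-point rounding.

```python
from fractions import Fraction


def expected_value(values, probs):
    """Weighted average of values, with probs as the weights (hypothetical helper)."""
    if len(values) != len(probs):
        raise ValueError("values and probs must have the same length")
    # An exact comparison is safe only because this sketch assumes Fraction inputs.
    if sum(probs) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(values, probs))


# Fair six-sided die: faces 1..6, each with probability 1/6.
faces = [1, 2, 3, 4, 5, 6]
probs = [Fraction(1, 6)] * 6

ev = expected_value(faces, probs)
print(ev, float(ev))  # 7/2 3.5
```

Because every face of a fair die is equally likely, the same number falls out of the simple average `sum(faces) / len(faces)`.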

Average Dice Value Against Number of Rolls

An illustration of the convergence of sequence averages of rolls of a die to the expected value of 3.5 as the number of rolls (trials) grows.
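This kind of convergence can be reproduced with a small simulation. The sketch below assumes a fair six-sided die simulated with Python's standard `random` module; the function name `running_averages`, the seed, and the number of rolls are arbitrary illustrative choices.

```python
import random


def running_averages(num_rolls, seed=0):
    """Return the running sample mean after each of num_rolls fair-die rolls."""
    rng = random.Random(seed)  # seeded so that a run is repeatable
    total = 0
    averages = []
    for n in range(1, num_rolls + 1):
        total += rng.randint(1, 6)  # one roll: an integer from 1 to 6 inclusive
        averages.append(total / n)
    return averages


averages = running_averages(100_000)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(f"after {n:>7} rolls: average = {averages[n - 1]:.4f}")
```

Early averages can sit well away from 3.5, while later ones should settle close to it. Any single run only approximates the limit, so the printed values depend on the seed.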