A neural network (or neural pathway) is the interface through which neurons communicate with one another. These networks consist of a series of interconnected neurons whose activation sends a signal or impulse across the body.

Neural networks

The Structure of Neural Networks

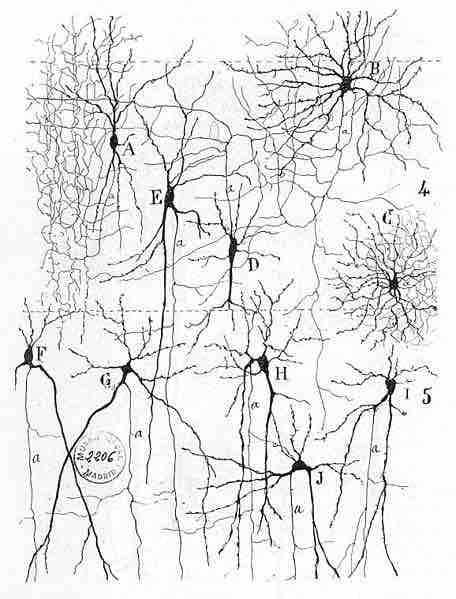

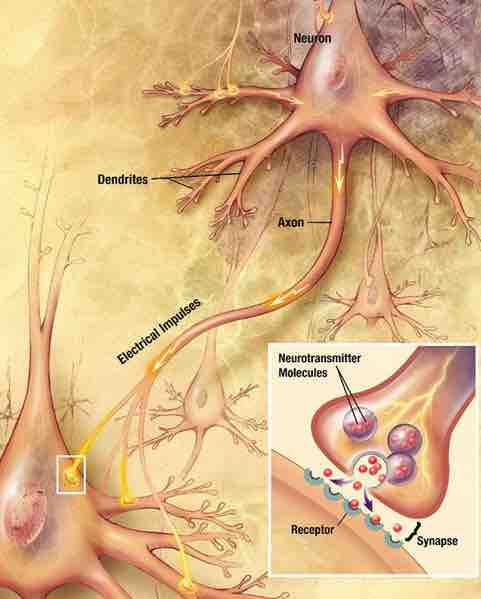

The connections between neurons form a highly complex network. The two basic kinds of connections are chemical synapses, which pass signals by releasing neurotransmitters, and electrical synapses (gap junctions), which pass electrical current directly between cells. A neuron typically interacts with its neighbors through several axon terminals that connect across synapses to the dendrites of other neurons.

If a stimulus creates a strong enough input signal in a nerve cell, the neuron generates an action potential and transmits this signal along its axon. The axon of a nerve cell is responsible for transmitting information over a relatively long distance, and so most neural pathways are made up of axons. Some axons are encased in a lipid-rich myelin sheath, which makes them appear bright white; axons that lack a myelin sheath (i.e., are unmyelinated) appear a darker beige color, which is generally called gray.
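
The threshold behavior described above can be illustrated with a toy model. The following is a minimal sketch, not a biophysical simulation: the function name `simulate_neuron`, the threshold of 1.0, the leak factor of 0.9, and the input values are all hypothetical and chosen only for illustration. It shows that a weak input never triggers a signal, while a stronger input repeatedly does.

```python
def simulate_neuron(inputs, threshold=1.0, leak=0.9):
    """Return the time steps at which a toy neuron fires.

    inputs    -- input strength at each time step (arbitrary units)
    threshold -- hypothetical level the potential must reach to fire
    leak      -- fraction of the potential retained from step to step
    """
    potential = 0.0
    spikes = []
    for step, strength in enumerate(inputs):
        # Accumulate the new input on top of the decayed potential.
        potential = potential * leak + strength
        if potential >= threshold:
            spikes.append(step)  # the neuron sends an action potential
            potential = 0.0      # reset after firing
    return spikes


weak = simulate_neuron([0.05] * 10)   # input too weak to reach threshold
strong = simulate_neuron([0.6] * 10)  # input strong enough to fire

print("weak input fires at steps:", weak)
print("strong input fires at steps:", strong)
```

In this sketch the weak input produces no spikes at all, because the leak cancels the small input before the potential can reach the threshold. Real neurons are far more complex, but the all-or-nothing character of the response is the same.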

The process of synaptic transmission in neurons

Neurons interact with other neurons by sending a signal, or impulse, along their axon and across a synapse to the dendrites of a neighboring neuron.

Some neurons are responsible for conveying information over long distances. For example, motor neurons, which extend from the spinal cord to the muscles, can have axons up to a meter long in humans. The longest axon in the human body is almost two meters long in tall individuals and runs from the big toe to the medulla oblongata of the brain stem.

The Capacity of Neural Networks

Because the basic function of a neuron is to send signals to other cells, neurons can also exchange signals with each other. Networks formed by interconnected groups of neurons are capable of a wide variety of functions, including feature detection, pattern generation, and timing. In fact, it is difficult to assign limits to the types of information processing that can be carried out by neural networks. Given that individual neurons can generate complex temporal patterns of activity independently, the range of capabilities possible for even small groups of neurons is beyond current understanding. However, we do know that we have neural networks to thank for much of our higher cognitive functioning.

Behaviorist Approach

Historically, the predominant view of the function of the nervous system was as a stimulus-response associator. In this conception, neural processing begins with stimuli that activate sensory neurons, producing signals that propagate through chains of connections in the spinal cord and brain, giving rise eventually to activation of motor neurons and thereby to muscle contraction or other overt responses. Charles Sherrington, in his influential 1906 book The Integrative Action of the Nervous System, developed the concept of stimulus-response mechanisms in much more detail, and behaviorism, the school of thought that dominated psychology through the middle of the 20th century, attempted to explain every aspect of human behavior in stimulus-response terms.

Hybrid Approach

However, experimental studies of electrophysiology, beginning in the early 20th century and reaching high productivity by the 1940s, showed that the nervous system contains many mechanisms for generating patterns of activity intrinsically—without requiring an external stimulus. Neurons were found to be capable of producing regular sequences of action potentials ("firing") even in complete isolation. When intrinsically active neurons are connected to each other in complex circuits, the possibilities for generating intricate temporal patterns become far more extensive. A modern conception views the function of the nervous system partly in terms of stimulus-response chains, and partly in terms of intrinsically generated activity patterns; both types of activity interact with each other to generate the full repertoire of behavior.

Hebbian Theory

In 1949, neuroscientist Donald Hebb proposed that simultaneous activation of cells leads to a pronounced increase in synaptic strength between those cells, a theory that is widely accepted today. Cell assembly theory, or Hebbian theory, is often summarized as "cells that fire together wire together," meaning neural networks can be created through associative experience and learning. Since Hebb's proposal, neuroscientists have continued to find evidence of plasticity and modification within neural networks.
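
The idea that co-activation strengthens a connection can be sketched with a simple update rule. This is one common textbook simplification and not Hebb's own formulation: the function name `hebbian_update`, the learning rate of 0.1, and the 0/1 activity values are assumptions made for illustration. The connection strength grows only when both cells are active at the same time.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Return the new connection strength after one time step.

    pre, post     -- activity of the sending and receiving cell (1 = firing, 0 = silent)
    learning_rate -- hypothetical constant controlling how fast the weight grows
    """
    # The weight changes only when pre and post are both nonzero.
    return weight + learning_rate * pre * post


w_together = 0.0  # the two cells always fire together
w_apart = 0.0     # the sending cell fires, the receiving cell stays silent

for _ in range(10):
    w_together = hebbian_update(w_together, pre=1, post=1)
    w_apart = hebbian_update(w_apart, pre=1, post=0)

print("fired together:", round(w_together, 2))
print("fired apart:", round(w_apart, 2))
```

After ten steps the connection between the co-active cells has strengthened, while the other connection is unchanged. This simple rule lets weights grow without limit, so models that use it usually add some form of decay or normalization.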