Two Types of Errors

Measurements made during experiments are generally subject to two different types of error: random (or chance) errors and systematic (or biased) errors.

Every measurement has an inherent uncertainty. We therefore need to give some indication of the reliability of measurements and the uncertainties of the results calculated from these measurements. To interpret experimental data properly, estimate the size of the systematic errors relative to the random errors. Random errors are due to the precision of the equipment, and systematic errors are due to how well the equipment was used or how well the experiment was controlled.

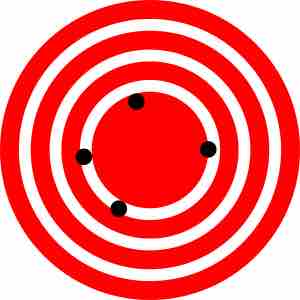

Low Accuracy, High Precision

This target shows an example of low accuracy (points are not close to the center of the target) but high precision (points are close together). In this case, there is more systematic error than random error.

High Accuracy, Low Precision

This target shows an example of high accuracy (points are all close to the center of the target) but low precision (points are not close together). In this case, there is more random error than systematic error.
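The two targets can be mimicked numerically. The following is a minimal sketch, not a prescribed procedure: the function name `bias_and_spread`, the true value, and both data sets are hypothetical and chosen only for illustration. It uses the mean offset from the true value as a rough indicator of systematic error and the sample standard deviation as a rough indicator of random error.

```python
from statistics import mean, stdev


def bias_and_spread(readings, true_value):
    """Return (bias, spread) for a list of readings.

    bias   : mean of the readings minus the true value (systematic part)
    spread : sample standard deviation of the readings (random part)
    """
    bias = mean(readings) - true_value
    spread = stdev(readings)
    return bias, spread


# Hypothetical data, chosen only to mimic the two targets.
TRUE_VALUE = 10.0
tight_but_shifted = [12.0, 12.1, 11.9, 12.0, 12.1, 11.9]    # low accuracy, high precision
centered_but_scattered = [8.0, 12.0, 9.0, 11.0, 10.5, 9.5]  # high accuracy, low precision

for label, readings in [
    ("tight but shifted", tight_but_shifted),
    ("centered but scattered", centered_but_scattered),
]:
    bias, spread = bias_and_spread(readings, TRUE_VALUE)
    print(f"{label}: bias = {bias:+.2f}, spread = {spread:.2f}")
```

In the first data set the bias is much larger than the spread, which corresponds to more systematic error than random error; in the second the spread dominates.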

Biased, or Systematic, Errors

Systematic errors are biases in measurement that cause the mean of many separate measurements to differ significantly from the actual value of the measured attribute. All measurements are prone to systematic errors, often of several different types. Sources of systematic errors include imperfect calibration of measurement instruments, changes in the environment that interfere with the measurement process, and imperfect methods of observation.

A systematic error makes the measured value always smaller or always larger than the true value, but not both. An experiment may involve more than one systematic error, and these errors may cancel one another, but each shifts the measured value in one direction only.

Accuracy (or validity) is a measure of the systematic error: it describes how well an experiment measures what it was trying to measure. If an experiment is accurate or valid, then the systematic error is very small. Accuracy is difficult to evaluate unless you have an idea of the expected value (e.g. a textbook value or a calculated value from a data book). Compare your experimental value to the literature value. If the difference is within the margin of error for the random errors, then it is most likely that the systematic errors are smaller than the random errors. If the difference is larger than that margin, then you need to determine where the errors have occurred. When an accepted value is available for a result determined by experiment, the percent error can be calculated.
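As a rough illustration of the comparison described above, the sketch below computes the percent error as the absolute difference between the experimental and accepted values, divided by the accepted value and multiplied by 100, and then checks whether that difference exceeds the random uncertainty. The function names and the numbers (a measured gravitational acceleration of 9.60 m/s² with a random uncertainty of 0.05 m/s², against an accepted 9.81 m/s²) are assumptions made up for the example.

```python
def percent_error(experimental, accepted):
    """Percent error of an experimental value relative to an accepted value."""
    return abs(experimental - accepted) / abs(accepted) * 100.0


def systematic_error_suspected(experimental, accepted, random_uncertainty):
    """True if the discrepancy is larger than the random uncertainty can explain."""
    return abs(experimental - accepted) > random_uncertainty


# Hypothetical result: g measured as 9.60 +/- 0.05 m/s^2, accepted value 9.81 m/s^2.
measured_g = 9.60
uncertainty_g = 0.05
accepted_g = 9.81

print(f"percent error: {percent_error(measured_g, accepted_g):.2f} %")
print("systematic error suspected:",
      systematic_error_suspected(measured_g, accepted_g, uncertainty_g))
```

Here the discrepancy (0.21 m/s²) is several times the random uncertainty, so under these assumed numbers a systematic error would be the likely explanation.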

For example, consider an experimenter timing the period of a pendulum's full swing. If their stopwatch or timer starts with 1 second already on the clock, then every result will be too large by 1 second. If the experimenter repeats the measurement twenty times (starting at 1 second each time), averaging does not remove the offset: the calculated average will still be about 1 second larger than the true period, which appears as a percentage error in the final result.
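The pendulum example can be simulated to show that repeating a measurement averages out random scatter but leaves a constant offset untouched. This is only a sketch: the true period of 2.00 s and the reading scatter of 0.05 s are assumed values, and the scatter is modelled as Gaussian noise from a seeded generator so that the run is repeatable.

```python
import random
from statistics import mean

TRUE_PERIOD = 2.00      # seconds (assumed for illustration)
TIMER_OFFSET = 1.00     # seconds already on the clock at the start
READING_SCATTER = 0.05  # seconds, assumed random error per reading

rng = random.Random(0)  # fixed seed so the simulation is repeatable

# Twenty readings, each carrying the same offset plus a small random error.
readings = [
    TRUE_PERIOD + TIMER_OFFSET + rng.gauss(0.0, READING_SCATTER)
    for _ in range(20)
]

average = mean(readings)
corrected = average - TIMER_OFFSET  # only possible once the offset is known

print(f"average of 20 readings: {average:.2f} s")
print(f"after removing offset:  {corrected:.2f} s")
```

The average stays close to 3 s rather than 2 s; only identifying and subtracting the offset brings the result back toward the true period.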

Categories of Systematic Errors and How to Reduce Them

- Personal Errors: These errors are the result of ignorance, carelessness, prejudices, or physical limitations of the experimenter. This type of error can be greatly reduced if you are familiar with the experiment you are doing.

- Instrumental Errors: Instrumental errors are attributed to imperfections in the tools with which the analyst works. For example, volumetric glassware such as burets, pipets, and volumetric flasks frequently delivers or contains volumes slightly different from those indicated by its graduations. Calibration can eliminate this type of error.

- Method Errors: This type of error often arises when you do not consider how to control an experiment. Ideally, any experiment should have only one manipulated (independent) variable, although this is often very difficult to accomplish. The more variables you can control in an experiment, the fewer method errors you will have.
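The calibration step mentioned under instrumental errors can be sketched as follows. This is a simplified illustration that assumes the instrument's error is a constant offset; real calibrations may need a more detailed model. The function names, the balance, the 50.00 g reference mass, and all readings are hypothetical.

```python
from statistics import mean


def calibration_offset(readings_of_standard, standard_value):
    """Estimate a constant instrument offset from repeated readings of a known standard."""
    return mean(readings_of_standard) - standard_value


def apply_calibration(raw_reading, offset):
    """Correct a raw reading by subtracting the estimated offset."""
    return raw_reading - offset


# Hypothetical balance readings of a 50.00 g reference mass.
standard_readings = [50.12, 50.10, 50.11, 50.13, 50.09]
offset = calibration_offset(standard_readings, 50.00)

# Hypothetical raw reading of an unknown sample on the same balance.
raw_sample = 23.48
print(f"estimated offset:  {offset:+.2f} g")
print(f"corrected reading: {apply_calibration(raw_sample, offset):.2f} g")
```

Repeating the readings of the standard keeps random scatter from distorting the offset estimate, so the correction targets the systematic part of the error.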